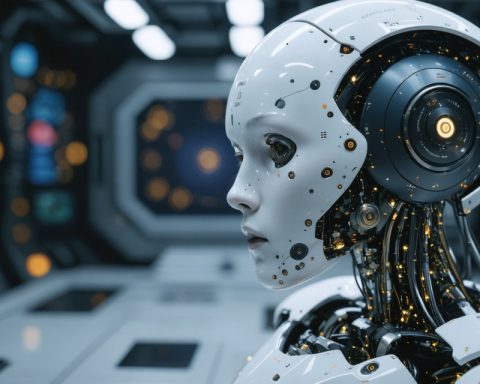

XAI

XAI, or Explainable Artificial Intelligence, refers to a set of methods and techniques in artificial intelligence (AI) that aim to make the operations and decisions of AI systems understandable to human users. The primary goal of XAI is transparency: stakeholders should be able to see how a model arrives at its decisions or predictions. This matters most in high-stakes domains such as healthcare, finance, and law, where the rationale behind an AI decision must be clear to support trust, accountability, and compliance with ethical standards.

XAI seeks to address the "black box" nature of many AI models, especially deep learning models, which often give no insight into their internal workings. Strategies such as feature importance scores, decision trees, and rule-based explanations help demystify a system's decision-making, so that users can interpret and evaluate model outputs. XAI also fosters user acceptance and supports better collaboration between human analysts and AI systems, which leads to better-informed decisions.
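To make "feature importance scores" concrete, here is a minimal sketch of one common variant, permutation importance: shuffle one input feature at a time and measure how much the model's error grows. Everything in it is an assumption made for illustration, including the toy `black_box_model`, the synthetic data, and the helper names; it is not tied to any particular XAI library.

```python
import random


def black_box_model(row):
    # Hypothetical opaque model used only for illustration.
    # It relies on feature 0 and ignores feature 1 entirely.
    return 3.0 * row[0] + 0.0 * row[1]


def mean_squared_error(model, rows, targets):
    # Average squared difference between predictions and targets.
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_features, seed=0):
    # For each feature: shuffle its column, re-score the model, and report
    # the increase in error relative to the unshuffled baseline.
    rng = random.Random(seed)
    baseline = mean_squared_error(model, rows, targets)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        shuffled_rows = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        shuffled_error = mean_squared_error(model, shuffled_rows, targets)
        importances.append(shuffled_error - baseline)
    return importances


# Synthetic data (an assumption): two features, target depends on feature 0 only.
data_rng = random.Random(42)
rows = [[data_rng.uniform(-1, 1), data_rng.uniform(-1, 1)] for _ in range(200)]
targets = [3.0 * r[0] for r in rows]

scores = permutation_importance(black_box_model, rows, targets, n_features=2)
for j, score in enumerate(scores):
    print(f"feature {j}: error increase when shuffled = {score:.4f}")
```

In this toy setup, shuffling feature 0 raises the error while shuffling feature 1 leaves it unchanged, which is the kind of signal a user can read without inspecting the model's internals.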
