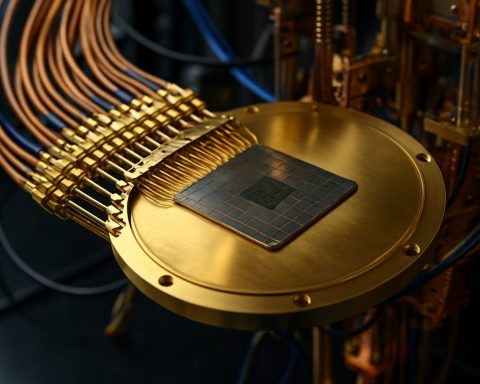

Quantum Computing

Quantum computing is a type of computation that uses the principles of quantum mechanics to process information. Unlike classical computers, which use bits as the smallest unit of data (each either 0 or 1), quantum computers use quantum bits, or qubits. Due to the phenomenon of superposition, a qubit can exist in a combination of the 0 and 1 states at the same time, so a register of n qubits can represent a weighted combination of all 2^n classical bit patterns. Quantum algorithms manipulate these weights, called amplitudes, so that measuring the register yields a useful answer with high probability. Additionally, quantum entanglement correlates qubits with one another, such that the measured state of one qubit can depend on the state of another, no matter the distance between them. These properties potentially allow quantum computers to solve certain complex problems much more efficiently than classical computers. Applications for quantum computing include cryptography, optimization problems, drug discovery, and simulating quantum systems. The development of practical quantum computers is still in its early stages, as challenges such as short qubit coherence times, high error rates, and the difficulty of scaling up systems remain to be addressed.
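Superposition and entanglement can be illustrated with a tiny classical simulation. The sketch below is an assumption-laden toy, not how a real quantum computer or any particular library works: it stores a two-qubit state as a plain Python list of four complex amplitudes, treats qubit 0 as the least significant bit of the index, and uses hypothetical helper names (`apply_hadamard`, `apply_cnot`, `probabilities`, `bell_state`) chosen only for illustration. A Hadamard gate puts qubit 0 into an equal superposition, and a CNOT gate then entangles it with qubit 1.

```python
import math


def apply_hadamard(state, target):
    """Apply a Hadamard gate to qubit `target` (bit position in the index)."""
    s = 1 / math.sqrt(2)
    mask = 1 << target
    new_state = [0j] * len(state)
    for i, amp in enumerate(state):
        i0 = i & ~mask  # same basis state with the target bit cleared
        i1 = i | mask   # same basis state with the target bit set
        if i & mask:
            # |1> maps to (|0> - |1>) / sqrt(2)
            new_state[i0] += s * amp
            new_state[i1] -= s * amp
        else:
            # |0> maps to (|0> + |1>) / sqrt(2)
            new_state[i0] += s * amp
            new_state[i1] += s * amp
    return new_state


def apply_cnot(state, control, target):
    """Flip the target bit of every basis state whose control bit is 1."""
    new_state = [0j] * len(state)
    for i, amp in enumerate(state):
        j = i ^ (1 << target) if i & (1 << control) else i
        new_state[j] += amp
    return new_state


def probabilities(state):
    """Measurement probability of each basis state (squared magnitude)."""
    return [abs(amp) ** 2 for amp in state]


def bell_state():
    """Build an entangled two-qubit state starting from |00>."""
    state = [1 + 0j, 0j, 0j, 0j]        # all amplitude on |00>
    state = apply_hadamard(state, 0)     # superposition on qubit 0
    state = apply_cnot(state, 0, 1)      # entangle qubit 1 with qubit 0
    return state


if __name__ == "__main__":
    for index, p in enumerate(probabilities(bell_state())):
        print(f"|{index:02b}>: {p:.3f}")
```

In this toy model the final state places equal probability on the outcomes 00 and 11 and none on 01 or 10, so the two simulated qubits always agree when measured. The list grows as 2^n with the number of qubits, which hints at why simulating large quantum systems on classical hardware becomes impractical.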